Sora 2 can feel like magic when you give it the right directions. You don’t need to sound like a screenwriter or a cinematographer. You just need a simple structure, a few concrete cues, and the discipline to keep each shot focused. This guide walks you through exactly what to write, why it works, and how to fold Sora 2 into a realistic business workflow.

Quick answer: How to prompt Sora 2

Write prompts as if you’re briefing a camera team: describe the scene, tell the camera where to stand and how to move, describe two or three short beats in order, set the lighting and color, and add a short audio note or a single line of dialogue. Set duration and orientation in the settings, not in your writing. Keep casts small and actions simple so Sora 2 can generate a clean video.

What is Sora 2?

Sora 2 is OpenAI’s latest video and audio generation model. It produces short, realistic clips with synchronized dialogue, ambience, and sound effects from a plain‑language description. The iOS app and the web composer make this accessible to non‑technical teams, while advanced users can reference images to lock look and layout.

Compared to early text‑to‑video tools, Sora 2 tracks physics more believably and follows instructions more closely. That combination matters for business storytelling. When you ask for a slow push‑in on a product on a dark wood desk with warm practical lights, for example, you’ll usually get something that looks and feels like that.

How does Sora 2 work?

Think of Sora 2 as two creative partners in one: a visual brain and an audio brain. You give it a short brief. It turns your words into a checklist of goals the scene needs to meet: who’s on screen, where the camera sits, what moves, and what the moment should sound like.

Conceptually, a large neural network trained on lots of video, audio, and text learns the link between common phrases and the way real scenes look and sound. You can picture a planner turning your prompt into a simple timeline of beats, a generator painting the frames moment by moment, and a companion model creating matching sound. Picture and audio stay in sync because each beat, like “cup lands on marble” or “door opens,” has to appear on screen at the right time, and the corresponding sound is placed there.

Because Sora 2 has seen many patterns of light, texture, and motion, it can infer basics like how shadows fall, how fabric moves when someone turns, or how reflections behave on glass. It does best when your instructions describe one clear shot with only a few moving parts.

Why does Sora 2 use prompts?

Natural language gives you a fast way to convey intent. You can identify the subject, the setting, the motion, and the vibe in one compact block of text. Sora 2 reads those cues and maps them to a shot with camera placement, light, color, and timing. The clearer your cues, the more reliable the results.

What a Sora 2 prompt should include

Before we jump into templates, keep one mindset: each prompt should describe one clear shot or a very short sequence. If you need more than three beats, plan multiple shots and stitch them later.

A strong Sora 2 prompt includes subject and setting, clear camera and motion, two or three sequential beats, a look and color note, and optional audio or dialogue. Use simple, observable actions and write them in the order the viewer will see them.

What is Sora 2 “looking for” from a prompt?

Sora 2 looks for concrete, film‑style cues that it can translate into a single visual idea. It responds best when you:

- Anchor who and where. Name the subject, the environment, time of day, and any standout prop.

- Specify camera. State framing and angle, then a single movement like a dolly in or a slow pan.

- Limit beats. Keep to two or three actions that fit inside your chosen duration.

- Define light and palette. Mention light direction or quality and name three to five palette anchors.

- Keep audio short. A quick ambience note or one short line of dialogue.
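If you draft prompts in bulk, the checklist above can be expressed as a small data structure. The Python sketch below is illustrative only: `ShotPrompt` and its field names are made up for this guide and are not part of Sora 2 or any OpenAI tooling. It checks the two counts named above (two or three beats, three to five palette anchors) and joins the cues into one block of text you could paste into the composer.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """One shot described with the five cues above (hypothetical helper)."""
    scene: str          # who and where, time of day, standout prop
    camera: str         # framing, angle, one movement
    beats: list[str]    # two or three short actions, in viewing order
    look: str           # light direction or quality
    palette: list[str]  # three to five palette anchors
    audio: str = ""     # optional ambience note or one short line

    def problems(self) -> list[str]:
        """Return checklist violations; an empty list means the shot passes."""
        issues = []
        if not 2 <= len(self.beats) <= 3:
            issues.append("use two or three beats")
        if not 3 <= len(self.palette) <= 5:
            issues.append("name three to five palette anchors")
        return issues

    def render(self) -> str:
        """Join the cues into one compact block of text."""
        beats = " ".join(f"{i}) {b}" for i, b in enumerate(self.beats, start=1))
        parts = [
            self.scene.rstrip(".") + ".",
            f"Camera: {self.camera}.",
            f"Scenes: {beats}.",
            f"Look: {self.look}; palette {', '.join(self.palette)}.",
        ]
        if self.audio:
            parts.append(f"Audio: {self.audio}.")
        return " ".join(parts)
```

Calling `problems()` before `render()` gives you a quick pass/fail on the counts, but it cannot judge whether the beats themselves are simple enough; that still takes a human read.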

Best practices for a Sora 2 prompt

- Write for the lens, not the idea. Replace abstract words like “cinematic” with concrete choices like “wide establishing, eye level, slow push‑in.”

- Set technical items in settings. Choose model, duration, and orientation in the app or web composer. Save your prose for content.

- Favor short beats. Short actions read more faithfully than complex ones.

- Minimize moving parts. Fewer characters and slower camera moves increase realism.

- Iterate on one variable at a time. Use Remix or a small text tweak to change only lens, palette, or motion between takes.

- Use an image reference when look matters. Start from a still to lock character design, wardrobe, or layout.
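One way to keep iterations honest is to generate the variants mechanically, so that only one cue changes between takes. This is a minimal sketch, assuming you keep your prompt cues in a plain dictionary; the function name and the keys are hypothetical.

```python
def one_change_variants(
    base: dict[str, str], field: str, options: list[str]
) -> list[dict[str, str]]:
    """Return copies of `base` that differ from it only in `field`."""
    if field not in base:
        raise KeyError(f"unknown field: {field}")
    # Skip any option equal to the current value so every variant is a real change.
    return [{**base, field: option} for option in options if option != base[field]]


base = {
    "camera": "medium close, eye level, slow push-in",
    "palette": "amber, graphite, walnut, slate",
}
takes = one_change_variants(
    base, "camera", ["wide establishing, eye level, slow push-in"]
)
```

Each entry in `takes` keeps the palette untouched, so any difference you see between clips can be traced back to the camera change.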

Sora 2 prompt templates

Use these business‑ready templates as drop‑in starting points. Edit the bracketed items to match your brand and goal.

Template 1: Fast one‑shot product reveal

Scene – [product] on a [surface] in [location], [time of day].

Camera – [framing and angle], [one movement].

Scenes – 1) [small action, like “soft light glides across logo”] 2) [user interaction, like “hand rotates device slightly”] 3) [hero moment, like “screen wakes with subtle reflection”].

Look – [lighting description], palette [three to five colors].

Audio – [ambience or SFX].

Dialogue – [optional one line].

Example prompt

Inside a quiet design studio at dusk, a graphite laptop sits on a walnut desk. Camera: medium close, eye level, slow push‑in. Scenes: 1) a soft light sweep reveals the engraved logo 2) a hand opens the lid a few inches 3) the screen wakes with a gentle reflection on the desk. Look: warm practical lamps with cool window edge light; palette amber, graphite, walnut, slate. Audio: futuristic-sounding ambient music.

Template 2: Director’s brief for a brand vignette

Format & look – [sensor/stock vibe], [grain or texture].

Lenses – [focal lengths], [filtration].

Lighting & palette – [key direction], [fill], [three to five palette anchors].

Location & framing – [place], [framing detail].

Scenes – 0.0–[t1]: [beat 1]. [t1]–[t2]: [beat 2]. [t2]–end: [beat 3].

Sound – [ambience], [foley], [dialogue if any].

Example prompt

Format & look: modern digital with soft halation; fine grain. Lenses: 35 mm then 50 mm; light Black Pro‑Mist. Lighting & palette: soft window key from left, negative fill on right; anchors teal, sand, rust. Location & framing: entry hall of a boutique hotel; start wide, then medium. Scenes: a concierge places a room keycard inside a paper sleeve on marble; the guest’s hand takes the sleeved keycard and removes it from the sleeve. Sound: classy jazz music, low room murmur.
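The timed `Scenes` line in Template 2 is simple arithmetic once you know the clip length. The sketch below splits the duration evenly across the beats. That even split is an assumption for illustration, since nothing in the template requires equal windows, and `beat_windows` is a hypothetical helper.

```python
def beat_windows(duration_s: float, beats: list[str]) -> str:
    """Label each beat with a time window, splitting the clip evenly."""
    if not beats:
        raise ValueError("need at least one beat")
    step = duration_s / len(beats)
    labeled = []
    for i, beat in enumerate(beats):
        start = f"{i * step:.1f}"
        # The last window runs to "end", matching the template's final slot.
        end = "end" if i == len(beats) - 1 else f"{(i + 1) * step:.1f}"
        labeled.append(f"{start}–{end}: {beat}.")
    return " ".join(labeled)
```

For a 15-second clip with three beats, this yields windows starting at 0.0, 5.0, and 10.0 seconds. Adjust the boundaries by hand if one beat needs more room than the others.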

Template 3: Talking‑head announcement with ambience

Scene – [speaker] in [setting] at [time].

Camera – [framing], [movement].

Scenes – 1) [micro gesture] 2) [headline line] 3) [closing reaction].

Look – [key and fill], palette [colors].

Audio – [ambience].

Dialogue – [Speaker: “line 1”], [Speaker: “line 2”].

Example prompt

At a windowed office in late afternoon, a product manager stands by a whiteboard. Camera: medium, eye level, slow push‑in. Scenes: 1) she glances at the board, then back to lens 2) headline line about the new feature 3) a small satisfied smile. Look: soft key from window, practical lamp fill; palette cream, navy, matte black. Audio: just dialogue. Dialogue: PM: “We’re rolling out workspace search next week.” PM: “Here’s what that means for your team.”
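A rough way to check that dialogue fits the clip is to count words. The sketch assumes about 2.5 spoken words per second, a common rule of thumb for conversational pace rather than anything Sora 2 documents, and `dialogue_seconds` and `fits_clip` are hypothetical helpers.

```python
def dialogue_seconds(lines: list[str], words_per_second: float = 2.5) -> float:
    """Estimate speaking time from a simple whitespace word count."""
    words = sum(len(line.split()) for line in lines)
    return words / words_per_second


def fits_clip(
    lines: list[str], clip_seconds: float, words_per_second: float = 2.5
) -> bool:
    """True if the estimated speaking time is within the clip length."""
    return dialogue_seconds(lines, words_per_second) <= clip_seconds


lines = [
    "We're rolling out workspace search next week.",
    "Here's what that means for your team.",
]
estimate = dialogue_seconds(lines)  # 14 words at the assumed pace
```

The two lines from the example total 14 words, so at the assumed pace they take a little under six seconds and leave room for the opening glance and closing smile in a 10-second clip.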

What a strong prompt looks like

Use the table as a checklist before you hit Generate.

| Component | What to write | Quick example |
|---|---|---|
| Scene | Who, where, when, one prop | “A barista in a cozy café at dusk with rain on the window.” |
| Camera | Framing, angle, one movement | “Medium close, eye level, slow push‑in.” |
| Beats | Two or three short actions in order | “Steam rises; customer lifts cup; they share a smile.” |
| Look & palette | Key light, fill or edge, 3–5 colors | “Soft window key left, warm practicals; amber, cream, walnut.” |
| Audio & dialogue | One ambience cue plus one short line | “Low crowd murmur; Barista: ‘Fresh pour for you.’” |

Is Sora 2 good for businesses?

Yes, with the right expectations. Sora 2 shines when you need short, high‑impact clips that feel real, read quickly on mobile, and don’t require complex blocking.

Strengths

- Fast iterations for product vignettes, recruiting teasers, and social ads.

- Realistic light and texture with simple camera moves.

- Synchronized audio for quick voice and ambience cues.

Limits to plan around

- Complex crowd scenes, rapid camera whips, and heavy physical interactions can drift.

- Brand assets like logos or protected marks require care. Keep prompts generic and composite brand elements in post.

- Continuity across many shots takes planning. Use consistent phrasing across prompts and lock looks with image references.

A quick planner table

| Scenario | Why Sora 2 fits | Risks to manage |
|---|---|---|
| 10‑second product tease | Sharp look, simple beats, quick publish | Avoid busy backgrounds that fight the hero |
| Paid social cut | Phone‑style realism, dialogue support | Keep lines very short to sync with duration |
| Recruiting teaser | People‑forward closeups, warm interiors | Reduce cast size to avoid lip‑sync drift |
| Motion background for landing page | Subtle loops with material realism | Avoid brand logos in generation; add them in edit |

Sora 2 + Visla: a clean way to finish and ship

Sora 2 is now part of Visla. You can generate AI clips with the Sora 2 model inside Visla, then use those clips in any video project. That makes it a quick way to get b‑roll footage tailored to the video you’re making.

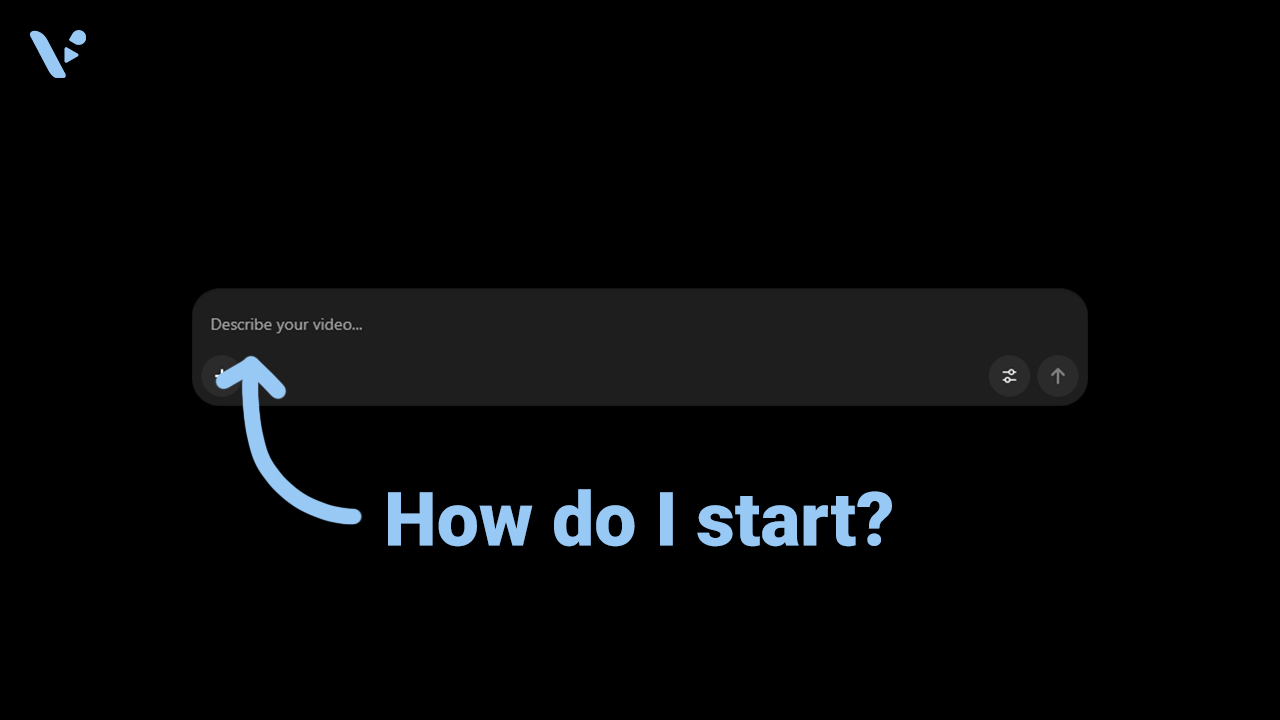

How to use Visla with Sora 2

- Prompt Sora 2 in Visla.

- Adjust the settings (duration and orientation).

- Generate your video clip. That’s it!

FAQ

Do you own the videos you create with Sora 2?

Yes. Under OpenAI’s current terms, you own the outputs you create as long as you comply with applicable laws and OpenAI’s policies. You must have rights to any inputs, and you may not upload or depict other people without their explicit consent; Sora’s cameo system is opt‑in and permissioned. At launch, downloads carry visible watermarks and embedded C2PA provenance, and ChatGPT Pro users can download some videos without a watermark (e.g., text‑only, not depicting public figures or third‑party cameos). To stay safe, keep brand logos and copyrighted characters out of generation and composite them in post, and document consent for any cameo talent.

What lengths and formats does Sora 2 support?

In the composer, you set duration, orientation, and other parameters; today the app supports 10‑ or 15‑second clips, and Pro users on web can generate up to 25 seconds when using Storyboards. Exports download as MP4, which imports cleanly into editors like Visla, Premiere Pro, CapCut, and Descript. Aspect ratio is selectable (portrait or landscape), and resolution options appear in the settings. Use landscape 16:9 for YouTube and portrait 9:16 for TikTok/Reels. If you need longer narratives, chain multiple shots with Storyboards, then stitch and caption in your editor.
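To plan a longer piece, it can help to work out the shot count and orientation up front. The sketch below uses the 10‑ and 15‑second clip lengths and the platform pairings mentioned above. The names are hypothetical, and the count ignores any trimming or overlap you add in the edit.

```python
import math

# Pairings taken from the paragraph above.
ORIENTATION_BY_PLATFORM = {
    "youtube": ("landscape", "16:9"),
    "tiktok": ("portrait", "9:16"),
    "reels": ("portrait", "9:16"),
}

APP_CLIP_SECONDS = (10, 15)  # clip lengths the app is described as supporting


def shots_needed(total_seconds: float, clip_seconds: int = 10) -> int:
    """Number of clips of one length needed to cover the target runtime."""
    if clip_seconds not in APP_CLIP_SECONDS:
        raise ValueError(f"clip_seconds must be one of {APP_CLIP_SECONDS}")
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    return math.ceil(total_seconds / clip_seconds)
```

A 45‑second narrative works out to three 15‑second shots or five 10‑second shots, and each shot still gets its own two or three beats.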

How does Sora 2 handle likeness and copyrighted characters?

Cameos require opt‑in verification and you control who can use your likeness, with revocable permissions and the ability to remove videos that include you. OpenAI has added guardrails that block public‑figure depictions in normal generations and recently tightened restrictions after criticism around historical‑figure deepfakes. Rights holders and estates can request exclusions, and OpenAI is moving toward more granular controls and opt‑out mechanisms for copyrighted IP. Practically, don’t prompt for real people or famous characters; cast fictional stand‑ins and add licensed assets in post.

May Horiuchi

May is a Content Specialist and AI Expert for Visla. She is an in-house expert on anything Visla and loves testing out different AI tools to figure out which ones are actually helpful and useful for content creators, businesses, and organizations.